Learning outcomes and assessment criteria in art and design. What’s the recurring problem?

Allan Davies, Independent Consultant

Keywords: learning outcomes, assessment criteria, constructive alignment, art and design

Abstract

This paper is a critical reflection on the development of learning outcomes (LOs) in art and design. It builds on a Learning and Teaching Support Network (LTSN) - funded project from 2003 that sought to provide support for colleagues seeking to make sense of the then new quality agenda. It seeks to identify recurrent issues and make a number of recommendations for further development.

Around 10 years ago, I completed a project, funded by the then Learning and Teaching Support Network (LTSN) Art, Design and Communication Subject Centre, intended to provide support for art and design teachers who were developing learning outcomes and assessment criteria for their students. My intention was to lay out the issues as clearly as possible and provide advice, particularly for new staff, on how outcomes and assessment might be related to the student experience. An underlying imperative for the project at the time was the emergence of ‘learning outcomes’ as key features of learning in the new quality agenda established by the Quality Assurance Agency (QAA). Although outcome-led learning was not new to higher education, the utilisation of learning outcomes within the definition of quality learning was. This paper is my personal reflection on those issues, based on work I have carried out subsequently, both formally as research and informally in supporting institutions and departments in writing outcomes and assessment criteria.

A good deal of literature on outcome-led learning was emerging, particularly on how to write coherent outcomes within a skills context and, increasingly, in contexts in which the written form, such as essays and reports, was the predominant mode of evidencing achievement. There was less comparable material on assessment, although the literature on assessment, particularly in secondary education, has a long history. There was, of course, even less literature on these topics within art and design.

I drew much of the material for the project from other sources. There was a broad consensus on the value of learning outcomes and how they might be articulated. There was an obvious concern to avoid ambiguity in the writing and presentation of learning outcomes with cautionary remarks and recommendations that I wove into the project, attempting, where possible, to highlight the challenges for art and design. Simultaneously, there emerged a most valuable critique of learning outcomes by Hussey and Smith (2002) that had the potential to derail the outcome juggernaut before it had developed any steam. Their comments, despite being equally relevant today, did not hamper the momentum that has led to learning outcomes being adopted in (almost) all HE institutions in the UK.

The design and use of assessment criteria have come under less scrutiny, largely because such criteria have always been a requirement of the validation of courses in universities. Nevertheless, as the focus shifted towards student-centred learning, the literature began to pick up on broad issues such as the differences between norm-referenced and criterion-referenced assessment, the impact of assessment on learning, self- and peer assessment and so on.

John Biggs’ book, Teaching for Quality Learning at University (2003), became highly influential during this period and has been used subsequently as a key reader on most courses for new teachers in universities throughout the UK. In my paper, I drew heavily on Biggs, particularly his SOLO taxonomy, as it was quite evidently, like Bloom’s taxonomy beforehand, a potential tool for developing and designing assessment criteria.

During this period, what became clear was that the developmental histories of learning outcomes and assessment criteria, for most institutions, were quite different.

Assessment criteria in most universities were derived from Bloom’s taxonomy (1956). The convenience of the structure of the taxonomy, as it related to the undergraduate degree classification system, meant that the categories could quite easily form the basis of the overall generic assessment strategy of universities. It wasn’t unusual, however, for departments to adapt the taxonomy to suit their disciplinary requirements or for universities themselves to insert extra levels to further differentiate the classes. Terms such as ‘good’, ‘very good’, ‘excellent’ and often ‘outstanding’ were, and still are, used to differentiate levels of competence, although in themselves they provide no insight into their meanings or how they relate to one another. Such terms are often used as qualifiers within level descriptors.

So, whilst the assessment policies, strategies and processes became embedded in the culture and social practices of universities and, hence, came to be seen as a strong evidential basis for comparison of standards and quality between universities, learning outcomes, a much more recent phenomenon, had to find a way of fitting in.

Curiously, whilst the existence of learning outcomes and assessment criteria was deemed essential for a positive outcome during the QAA Subject Review process (1998-2000), almost half of the art and design subject community seemed to have taken no heed of the need to ensure the clarity and use of assessment criteria.

Less than half (43 per cent) of providers' arrangements for the assessment of students are completely effective. Although students are assessed using a suitably wide range of methods, the majority of reports identify a number of sector-wide concerns with the assessment of students. These relate primarily to inconsistencies in the clarity and use of assessment criteria, and their relationship to published learning outcomes. The criteria for different assessment bands are not always sufficiently differentiated. Where generic criteria have been developed, these often fail to engage with subject-specific learning outcomes, and the reviewers also report inconsistencies in the grading of different levels of achievement. (QAA, 2000, Section 36, Teaching Learning and Assessment)

At the end of the report and by way of recommendation, the authors suggested ‘there is a widespread need to improve the definition of assessment criteria, to relate them to published learning outcomes and to use them consistently’ (QAA, 2000, Section 69, Conclusion).

At the time, this was not regarded as an issue peculiar to art and design. In 2000, the QAA published its ‘Code of Practice for the Assurance of Academic Quality and Standards in HE’ with Section 6 of the Code urging institutions to ensure that ‘assessment tasks and associated criteria are effective in measuring student attainment of the intended learning outcomes’ (Section 6: Assessment of Students).

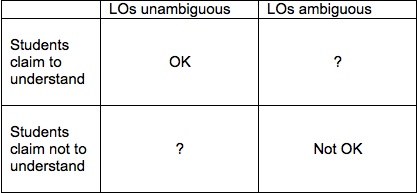

In a workshop at the Centre for Learning and Teaching in Art and Design (CLTAD) at the University of the Arts in 2003, organised to investigate this concern, 16 experienced reviewers considered what they felt was behind this difficulty. The conclusion reached is interesting in as much as the problem, as manifest in individual institutions, was revealed to arise not so much from the interrogation of the evidence base submitted to the QAA as from their seeking of the actual student experience. If student groups reported, during the review, that they indeed did know what was required of them on their course and they were clear about how and why they were being assessed, despite the evident lack of clarity of the documentation given to them, then there was no issue to answer. In these instances, it was accepted that there existed strategies set up for students to receive this information other than those revealed through the formal course documentation. This is important insofar as the student voice about their experience was seen as paramount. It was only in those instances where the students reported that they didn’t know what they had to do and how they were being assessed (despite published learning outcomes and assessment criteria) that reviewers could legitimately challenge the coherence of the outcomes and the criteria. All this gives rise to the following possibilities:

Figure 1

The ideal scenario is, of course, revealed in the top left sector. The bottom right sector represents the worst possible scenario. The other two sectors are interesting. The bottom left suggests that the course team has spent more effort developing outcomes than helping students to understand them. As Ferenc Marton said at the ‘Improving Student Learning Conference’ in Exeter (1995), ‘If a student doesn’t understand, then there is something we have taken for granted that we shouldn’t have taken for granted’. This seems to me a case in point.

In my time, I have witnessed all four of the above scenarios but it is the top right sector that intrigues me most. Our obsession with establishing the accuracy/clarity of learning outcomes, in the belief that this is an essential prerequisite for quality learning to take place, is undermined by those courses in which the written learning outcomes are largely unclear but the students are performing well. A lack of clarity in the learning outcomes, it seems, does not mean that students are not clear about what they have to do. Indeed, learning outcomes, ambiguous or otherwise, appear to be no substitute for established learner support systems and other frameworks that help students understand what they have to do in order to successfully complete a programme of work. Briefs and briefings are familiar in art and design along with tutorials, interim crits and feedback forums. It is during these supportive scenarios that art and design students formulate their intentions and actions and come to understand what ‘imagination’, ‘creativity’, ‘risk-taking’, etc, (the very terms regarded as potentially ambiguous) actually mean for them.

I would argue that the insistence that learning outcomes should be sufficiently clear ‘to be measurable’ has not helped those subject areas, such as the creative arts, in which articulating outcomes that involve the development of intuition, inventiveness, imagination, visualisation, risk-taking, etc, is challenging. In terms of meaningfulness, they equate to the notion of ‘understanding’, a cognitive term which is regarded as too complex and which should be substituted by other, more measurable, terms such as ‘explain’, ‘analyse’, etc. Another drawback in the use of these terms, acknowledged by Biggs (2003), is that they are regarded as ‘divergent’ and as such do not invite one appropriate answer but a range of possibilities.

An example of this is art and design students’ ability to visualise. This cognitive ability is a cornerstone of creative thinking. It requires the use of imagination and judgment and we expect all art and design students to develop it as they progress in their study. Fashion students develop their collections by visualising what comes next; furniture design students visualise their products individually, together and in a context; graphic design students visualise alternative layouts; and so on.

Nevertheless, this concept is not easily captured in learning outcome form. It’s not the kind of thing that can be measured easily. It is, in fact, developed within the whole complex process of the practice and over time.

Constructive alignment, again Biggs’s notion (2003), attempts to tackle an important issue relating to the coherence of the programme of study and the student experience of it. There has to be a relationship between what a student is expected to do (the intended learning outcomes) on a programme, the programme content and delivery that supports the student’s achievement and the process and judgments by which the quality of the learning is determined (assessment criteria). For me, Rowntree raised this issue forty years ago: ‘to set the student off in pursuit of an un-named quarry may be merely wasteful, but to grade him (sic) on whether he catches it or not is positively mischievous’ (1977).

It would be odd for any teacher, nowadays, to dissent from the sentiment that students need to know what they are expected to learn on any given course or module. However, the over-specification of outcomes for the purpose of measurement can be counter-productive in art and design and other creative disciplines. The more specific the learning outcome is, the more specific the ‘quarry’ will be and the greater the constraint on whether the most appropriate quarry is caught or not. This is not just seen to be true of creative subjects:

Limits to the extent that standards can be articulated explicitly must be recognised since ever more detailed specificity and striving for reliability, all too frequently, diminish the learning experience and threaten its validity. There are important benefits of higher education which are not amenable either to the precise specification of standards or to objective assessment (Price et al, 2008)...

Apart from the constraints of the use of language, students who take a strategic approach to learning are not likely to depart from the published words and the concrete world. For them, literal interpretation is paramount. However, art and design students are often in pursuit of a ‘quarry’ of which they are given only partial knowledge. Indeed, this can be a deliberate learning strategy in the creative arts. For art and design students, formulating and finding their own quarry is an essential part of the discovery process. They do, nevertheless, need to know the ‘landscape’ and the ‘boundaries’ when they are in full pursuit. It might be that these are better articulated in the form of a discourse than in specific outcome form and more usefully manifested in project briefings, team meetings, etc.

Students also need to know how well they have performed in ‘catching the quarry’. In the UK universities classification system, we require final judgments about student performance to be within one of five categories – 1st, 2.1, 2.2, 3rd and fail. This is often mirrored throughout courses by utilising grades rather than classes. Usually, assessment grades at module level reflect the system as a whole. Hence, grading systems use criteria to differentiate levels of performance. To satisfy both Rowntree and Biggs, therefore, we need to demonstrate the relationship (alignment) between the learning outcomes and the assessment criteria.

It is here that serious issues arise, often because of the dutifulness of teachers as they try to reconcile the language of learning outcomes with that of assessment criteria. Since the publication of the QAA Art and Design Overview Report in 2000, the difficulties identified in Section 36 have continued to set challenges for teachers and administrators alike, all the more so given the observations in the most recent QAA Institutional Audit Overview Report:

Most institutions have moved to a common assessment framework for the great majority of their degree programmes, and institutional as opposed to disciplinary or subject determination of assessment schemes is much more evident than hitherto. (QAA, 2011)

A finding that occurred more than once was that some programme specifications contained only generic learning outcomes and assessment criteria, so that subject-specific information was lacking and it was frequently not possible to determine from the programme specification any subject-specific assessment strategy. (QAA, 2011)

There is obvious uncertainty in the sector as to how generic and subject outcomes operate within the scheme of things. If they are overly generic they become less meaningful and it is unclear how the outcomes and assessment criteria relate to each other. If they are overly specific it becomes difficult for judgments about performance to be meaningful. It might be worth reflecting that the most accurate map of a region would have a one-to-one relationship with the terrain. That is, the map would be as big as the region and, hence, useless.

So, I suggest, whilst it is important in art and design that students know what they have to do on any course of study, that knowledge need not come through published learning outcomes. Learning outcomes might be seen as necessary for administrative purposes but they are not sufficient in helping students develop an idea of what they will be learning and how they will go about it. Indeed, in a highly supportive context, learning outcomes might be so generalised as to only define the landscape and the boundaries of their intended learning. The knowing of what to do becomes developmental and personalised.

Observations

- The requirement that all learning outcomes should use terms, particularly verbs, which are ‘measurable’ creates more challenges than it resolves. To insist on using terms such as ‘identify’, ‘explain’, ‘analyse’ and so on does not make the task of assessment any easier since explanations and analyses, etc, are discipline specific and are likely to be equally ambiguous for students who have not yet been inducted into the language of the discipline.

- The expectation that outcomes can be specified accurately and measurably at each level of the student’s experience is fanciful. In art and design, teachers attempt to reconcile discipline benchmark statements, programme or course outcomes, stage-level outcomes, module outcomes and project outcomes, all within a coherent hierarchy using a limited set of verbs. Something has to give.

- In art and design, outcomes are not achieved once and that is it. They are regularly returned to within and beyond levels. Therefore, assuming that outcomes, once addressed, are completed does not reflect the ‘spiral’ nature of the pedagogy of the discipline.

- Designing and applying assessment criteria within an already overcrowded landscape of levels and outcomes can only add to student uncertainty.

- In art and design, students complete ‘projects’ that are often driven by briefs that reflect practice within the professional world. Sketchbooks, journals and other supporting material are often submitted to demonstrate evidence of their achievement. In most cases, this can be seen as ‘holistic’ assessment in which the full collection of submitted material contributes to the final judgment. Holistic assessment practices do not easily lend themselves to ‘analytical’ assessment in which individual elements are marked and then the scores added up.

Given these observations, how do we move forward? How do we overcome the emergent technocratic ideology of learning and assessment but at the same time aspire to the broad interests of quality regimes, in which students understand what is required of them, the standards within the discipline are transparent to external agents and judgments about student achievement are seen to be valid and reliable? And, most importantly, how do we prevent the bureaucracy of outcomes and assessment from overwhelming both teachers and students?

Unless a teacher has been party to the design and development of a programme, he or she will not necessarily understand how what they are expected to do fits into the whole. Programme design in such a complex landscape is often a negotiation of the language that embraces it. Only the course designers have a real understanding of how things fit together. New or part-time teachers, for instance, have to take the module outlines at face value and make sense of them in terms of their own professional experience. The greater the number of outcomes and the more elaborate the assessment scheme, the more likely the whole thing will be sidestepped. Common sense often prevails in these circumstances. Therefore, there is a virtue in keeping the outcomes to a minimum even if this means a loss of specificity and apparent ambiguity. Rather than measurability, the focus should be on meaningfulness. It is better to provide a structure for discussions with the students, to enable them to begin to engage in the discourses of the community they are joining, than to assume they understand how they will perform against ‘measurable’ outcomes. For this purpose, students only need to understand whether they have addressed or met the outcome or not. It should be the stage level descriptors and the assessment criteria which provide the evidence of achievement.

Writing assessment criteria that are meaningful in relation to the outcomes and student performance is equally challenging. Constructively aligning outcomes and criteria makes sense in the abstract but it is the actual practice of relating them that is challenging. Biggs (2003) acknowledges the difficulty that arises in a predominantly ‘divergent’ set of disciplines such as the creative arts. It is not unusual to see assessment criteria expressed in the same language as the outcomes, thus making it impossible to differentiate them. The assessment criteria need to distinguish different levels of achievement without resorting to the terms already used by the outcomes. Bloom’s taxonomy is not helpful in this sense. Indeed, Bloom’s taxonomy (1956) replicates most of the terms used in essay and report writing. The tension is only revealed when Bloom’s taxonomy is used in a creative arts context where cognitive abilities other than knowledge and understanding are developed.

Biggs’s SOLO taxonomy (2003) is a helpful guide in that it is derived from research and is deliberately hierarchical with each level embracing the previous one. So a student who takes a ‘relational’ approach to a task will also demonstrate capabilities in utilising ‘multi-structural’ and ‘uni-structural’ approaches. The taxonomy identifies students’ concepts of, and approaches to, learning in an increasingly sophisticated way.

If we use the idea of a ‘nested’ hierarchy as the basis of the underlying quality structure for assessment in the way that Biggs does (and the way that Bloom’s taxonomy is seen to do), but use concepts derived from the discipline rather than appropriate the terms uncritically from Bloom or elsewhere, then we might go some way to resolving some of the tensions revealed in recent QAA Audit reports.

Below is a worked example from Graphic Design, with explanatory commentary, to illustrate a possible approach to aligning learning outcomes with assessment criteria and avoiding the recurring pitfalls:

Example

Programme outcomes

The following programme outcomes are based on the threshold standards as expressed in the QAA Subject Benchmark Statement for Art and Design (2008). It is important to make reference to the Statement, in particular, to the threshold standard descriptors which set out what is required of a student to be eligible for an award in art and design. At the point of graduation (i.e. at Stage 3), students must demonstrate that they have the requisite skills and abilities, as outlined, in order to gain at least a pass degree. Apart from further descriptors alluding to a ‘typical’ pass, there are no indicators of further ‘standards’ relating to the classification system. It is expected that these will be articulated through the institution’s / discipline’s own assessment criteria.

At the end of the programme you will be able to:

A. Apply Graphic Design principles, research methodologies and analytical judgment in the generation of your research material, ideas, concepts and proposals

B. Select and utilise appropriate materials, techniques, methods and media in the realisation of your work

C. Identify and critically evaluate your practice within the contemporary cultural, historical, social and professional contexts through the use of a range of presentation skills

D. Work both independently and collaboratively (QAA, 2008).

Stage learning outcomes for Graphic Design

The following descriptors are deliberately succinct. They represent a ‘nested’ hierarchy of expectations of all students at each level of study so that it is taken that Stage 3 embraces Stages 1 and 2. They also represent the context in which the level standards are set. The key questions here are, ‘What is it, in the broadest of terms, we want all students to be able to do at the end of the programme (Stage 3) and how do we get them there (Stages 1 and 2)?’

Stage 1

Identify and utilise the skills and abilities of a graphic designer;

Articulate the focus of your work in relation to other specialisms;

Locate your work within a critical/theoretical and historical context.

Stage 2

Articulate your individual identity as a graphic designer;

Work independently and collaboratively;

Locate yourself and your work within broader issues and aspects of contemporary social practice.

Stage 3

Work effectively as a graphic designer.

Learning outcomes

The learning outcomes at module/unit level should seek to enable students to fulfil the Stage outcomes, again articulated as broadly as possible. Inevitably, unit briefs will combine creative expectations with more specific, often skill-based outcomes. The focus should be on meaningfulness rather than on measurability, bearing in mind the support structures put in place to ensure that ‘we haven’t taken for granted what we shouldn’t have taken for granted’.

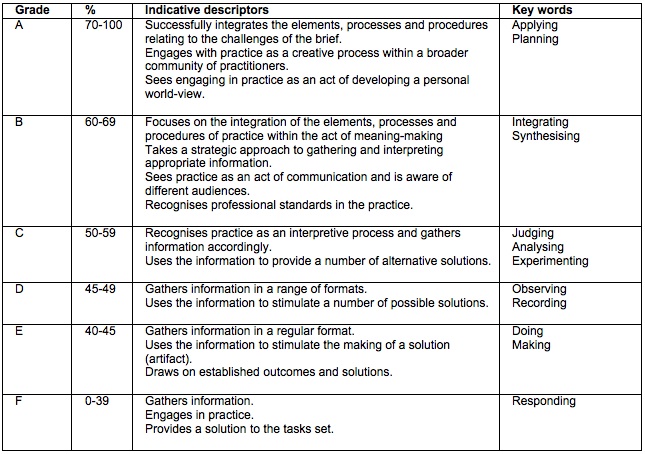

Assessment criteria and grading/marking scale

The following scale is indicative and will vary depending on the nature and nuances of the discipline. Again, the categories are nested with each one incorporating the one below it. The hierarchy is based on students’ abilities to integrate their thinking and progressively apply their thinking and abilities to more elaborate contexts. The scale can be applied at all three stages as the context and level of challenge is determined by the stage descriptor. Stage 3, for instance, is more challenging than Stages 1 and 2. As students progress they not only become more proficient in making and observing, say, they also get better at integrating these abilities and in more complex contexts. This is the essence of the ‘spiral’ curriculum.

Figure 2

The ‘key words’ which differentiate each level of achievement are, in this instance, based on the outcomes of an AHRB-funded project (Davies and Reid, 2000 and Davies, 2006) which sought to identify discipline differences in art and design. What is important is that they are derived from the student experience of the subject. Other terms might be preferable but they should, ideally, be generated from observations of the structure of the learning outcomes of the discipline in context.

The act of assessment should be twofold. Firstly, determining whether the student has engaged with all the required learning outcomes (this is normally regarded as a pass). Then, using the assessment criteria, determining at what process level the student has been operating, given the full body of submitted work. This is not a question of adding up marks, moving from the specific to the general. It is more a question of determining an overall grade and justifying it with the available evidence against the criteria. Fine-tuning of the mark is negotiated afterwards.

As we have seen, the task of ‘mapping’ outcomes, curriculum and assessment can lead to a plethora of descriptors. In the search for accuracy and ‘clarity’, I would argue, the more we seek to map, paradoxically, the more the landscape becomes ambiguous if not for teachers then certainly for students. The use of matrices to generate assessment descriptors may appear methodical and structured but, in my experience, they produce far too many possibilities and lead to the analytical approach to assessment that Biggs, in particular, warns us off. They also attract the use of superlatives to differentiate the levels of achievement. At this point all is lost for the whole scheme becomes tautological.

Biography

Allan Davies is a consultant in Higher Education Art and Design. Prior to this he was a Senior Adviser at the Higher Education Academy and before that he was Director of the Centre for Learning and Teaching in Art and Design (CLTAD) at the University of the Arts. In the early days he was Head of Art and Design at what is now the University of Worcester and even further back he was programme leader for graphic information design at what was then Falmouth College of Art. During some of this time he was a GLAD committee member. He is currently a QAA reviewer and was a member of the original QAA Subject Benchmark Statement for Art and Design panel.

References

Biggs, J.B. (2003) Teaching for Quality Learning at University, SRHE & OU Press.

Bloom, B.S. et al. (1956) Taxonomy of educational objectives: The classification of educational goals: Handbook I, Cognitive Domain, New York, David McKay.

Davies, A. and Reid, A. (2000) ‘Uncovering Problematics in Design Education: Learning and the Design Entity’, in Swann, C. and Young, E. (eds) Re-Inventing Design Education in the University: Proceedings of the International Conference, School of Design, Curtin University, Perth, pp. 178-184.

Davies, A. (2006) ‘“Thinking like…” the enduring characteristics of the disciplines in art and design’, in Davies, A. (ed.) Enhancing Curricula, 3rd CLTAD Conference Proceedings, Lisbon.

Hussey, T. and Smith, P. (2002) ‘The trouble with learning outcomes’, Active Learning in Higher Education, 3(3), pp. 220-233.

Price, M., O’Donovan, B., Rust, C. and Carroll, J. (2008) ‘Assessment standards: a manifesto for change’, Brookes eJournal of Learning and Teaching, 2(3).

QAA (2000) ‘Art and Design Subject Overview Report 1998/2000’.

QAA (2008) ‘Subject Benchmark Statement: Art and Design’.

QAA (2011) ‘Outcomes from Institutional Audit (2007-09): Assessment and Feedback’, 3rd Series.

Rowntree, D. (1987) Assessing students: how shall we know them? (revised edition), London, Kogan Page.

Figures 1 and 2 supplied by Allan Davies

Listing and header images: sections from photo by Paul Clark